FedLPA: One-shot Federated Learning with Layer-Wise Posterior Aggregation

Jan 1, 2024·

,

,

,

,

,

·

0 min read

,

,

·

0 min read

Xiang Liu

Liangxi Liu

Feiyang Ye

Yunheng Shen

Xia Li

Linshan Jiang

Jialin Li

Abstract

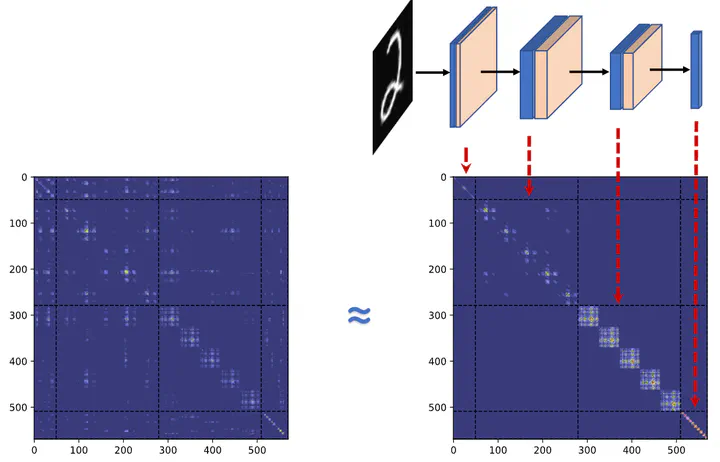

One-shot federated learning aims to train a global model with only one round of communication between clients and the server. Existing methods typically aggregate model parameters or predictions, but they often suffer from poor performance due to the limited information exchange. In this paper, we propose FedLPA, a novel one-shot federated learning method that performs layer-wise posterior aggregation. Instead of aggregating point estimates, FedLPA aggregates the posterior distributions of model parameters at each layer, which captures the uncertainty and provides richer information for aggregation. We demonstrate that FedLPA achieves superior performance compared to existing one-shot federated learning methods on various benchmark datasets.

Type

Publication

Advances in Neural Information Processing Systems